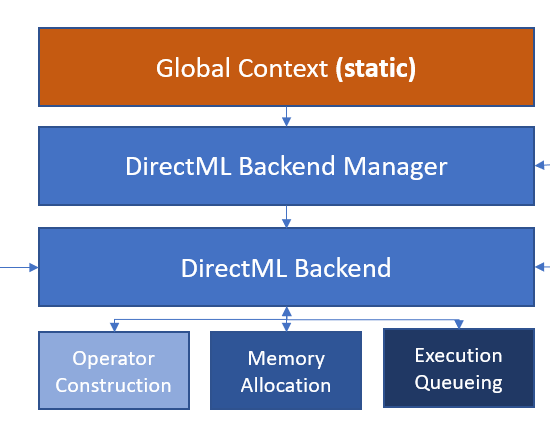

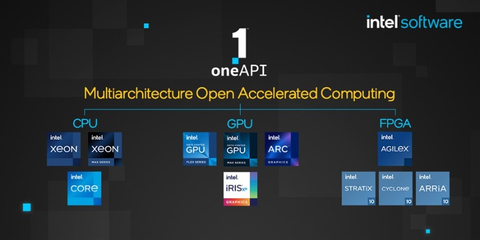

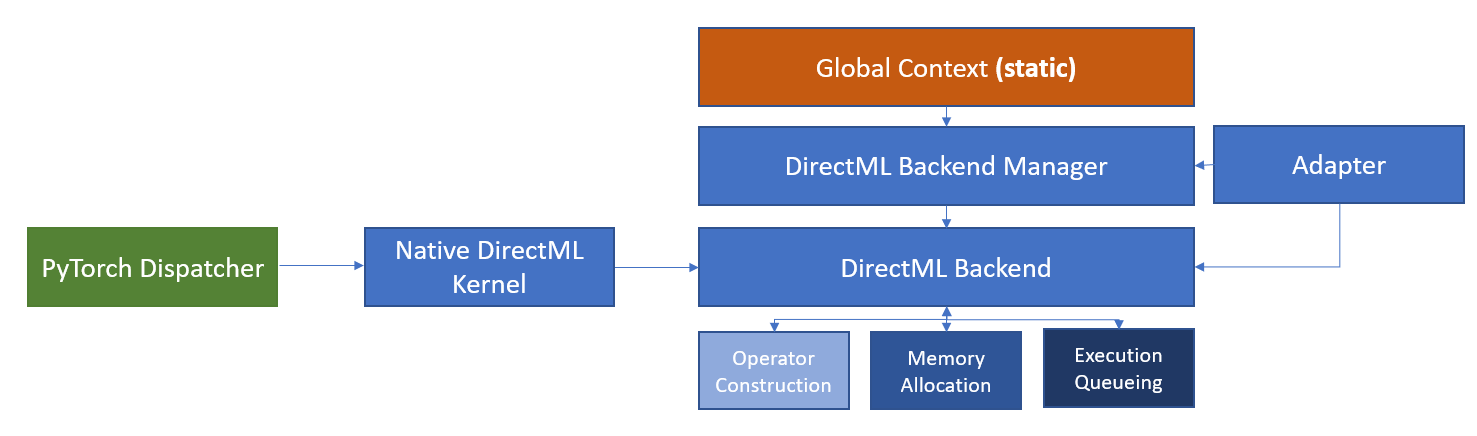

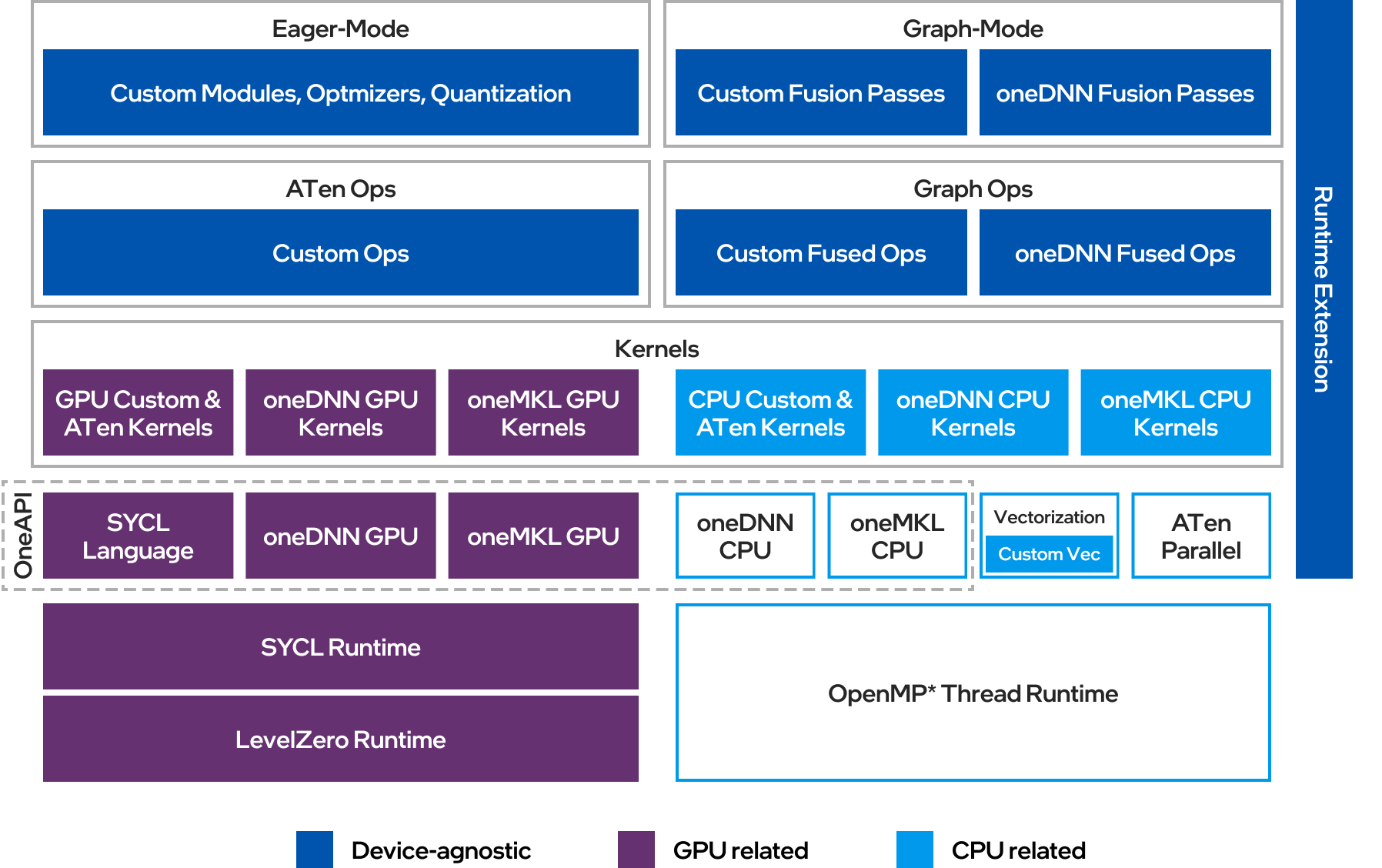

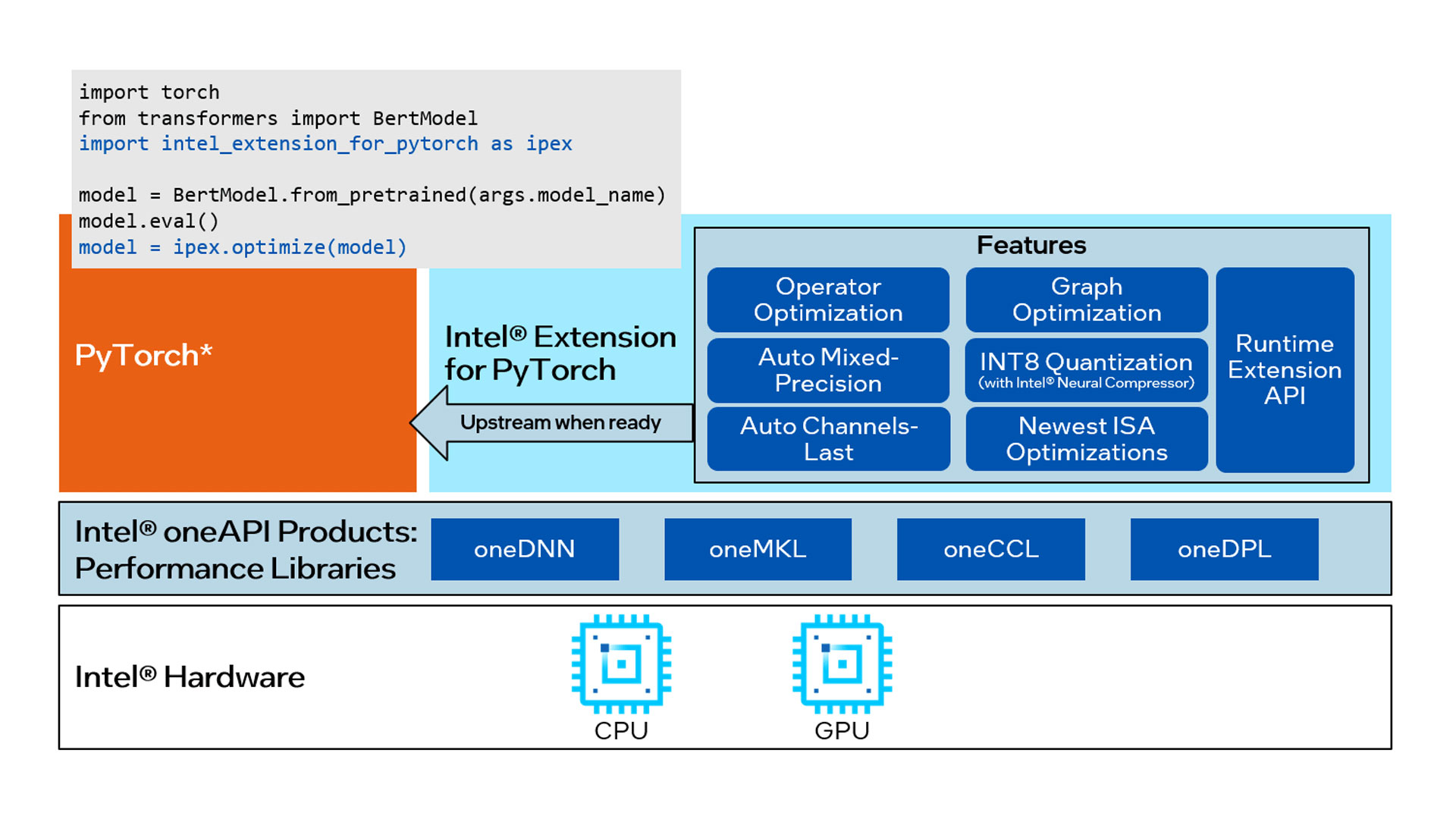

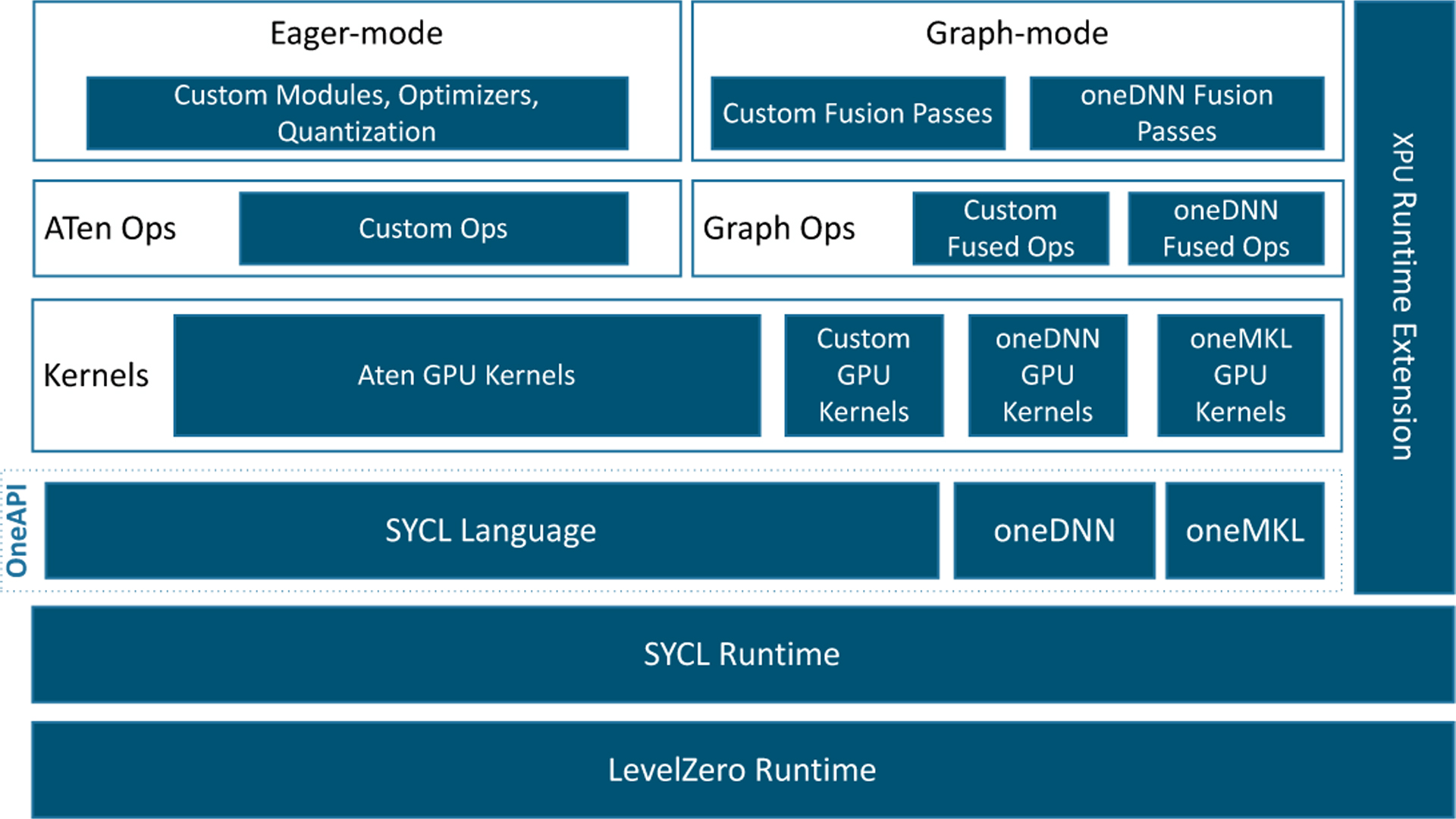

GitHub - intel/intel-extension-for-pytorch: A Python package for extending the official PyTorch that can easily obtain performance on Intel platform

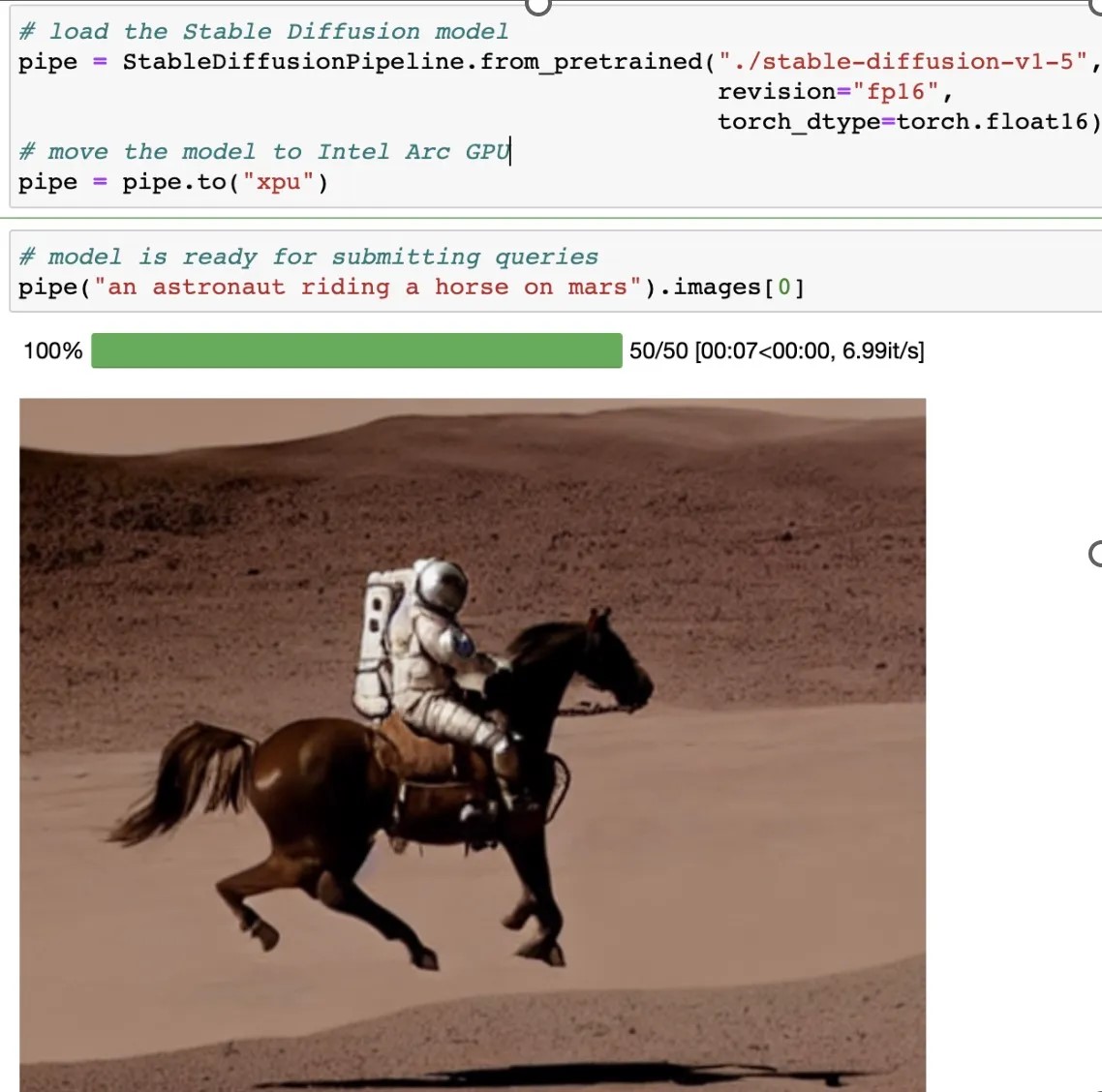

Introducing PyTorch with Intel Integrated Graphics Support on Mac or MacBook: Empowering Personal Enthusiasts : r/pytorch

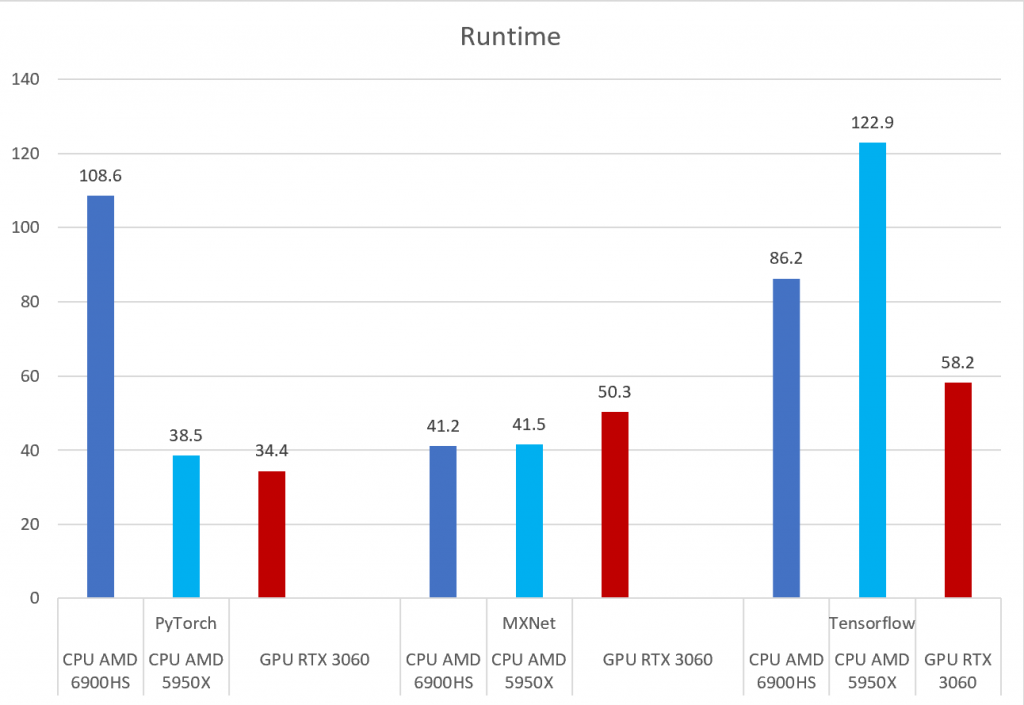

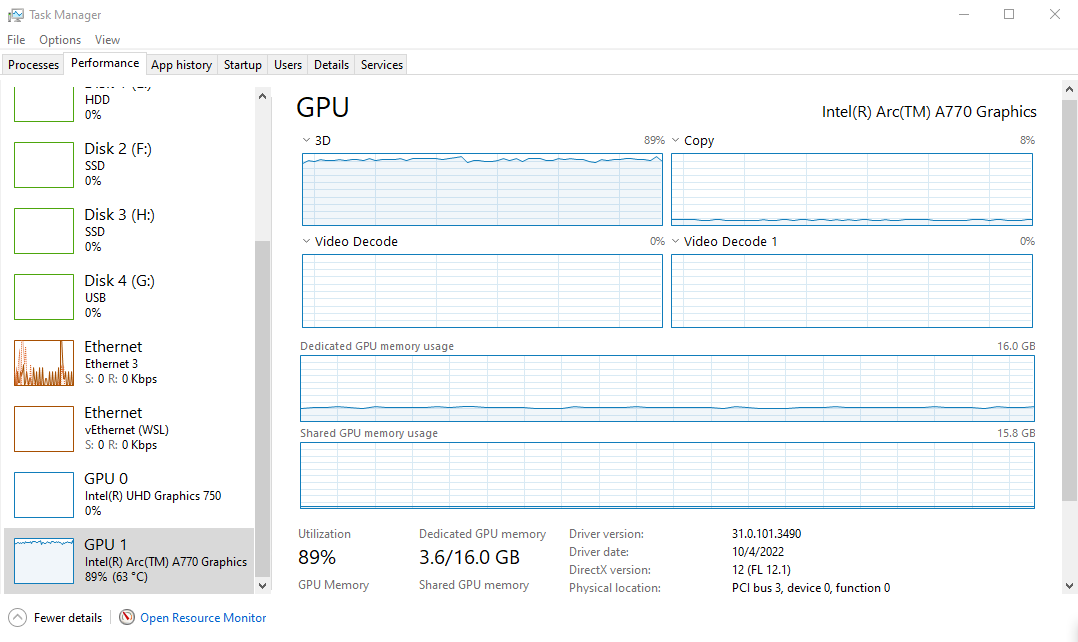

PyTorch, Tensorflow, and MXNet on GPU in the same environment and GPU vs CPU performance – Syllepsis

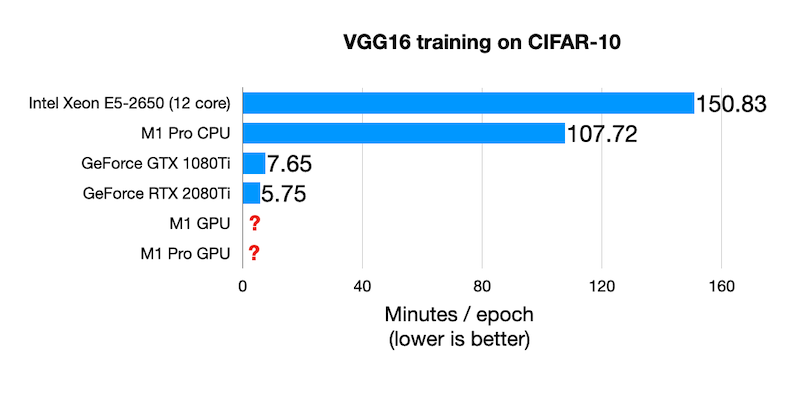

![\[P\] PyTorch M1 GPU benchmark update including M1 Pro, M1 Max, and M1 Ultra after fixing the memory leak : r/MachineLearning](https://preview.redd.it/5dkat9hoi3191.png?width=2637&format=png&auto=webp&s=dc42ee03167dd3aefbd0319061994bfc2ff24dab)

![\[D\] My experience with running PyTorch on the M1 GPU : r/MachineLearning](https://preview.redd.it/p8pbnptklf091.png?width=1035&format=png&auto=webp&s=26bb4a43f433b1cd983bb91c37b601b5b01c0318)